How to Quickly Implement REST API Integration to Optimize Business Processes

Copy the token shown in the figure above, then run the code below and paste the token into the text box it displays:

```python
from huggingface_hub import notebook_login

notebook_login()
```

```
%%capture
!sudo apt -qq install git-lfs
!git config --global credential.helper store
```

```python
import numpy as np
import torch
import torch.nn.functional as F
import torchvision
from matplotlib import pyplot as plt
from PIL import Image


def show_images(x):
    """Given a batch of images, arrange them in a grid and convert it to a PIL image."""
    x = x * 0.5 + 0.5  # map from [-1, 1] back to [0, 1]
    grid = torchvision.utils.make_grid(x)
    grid_im = grid.detach().cpu().permute(1, 2, 0).clip(0, 1) * 255
    grid_im = Image.fromarray(np.array(grid_im).astype(np.uint8))
    return grid_im


def make_grid(images, size=64):
    """Given a list of PIL images, paste them side by side in one row for viewing."""
    output_im = Image.new("RGB", (size * len(images), size))
    for i, im in enumerate(images):
        output_im.paste(im.resize((size, size)), (i * size, 0))
    return output_im


# On a Mac, you may need device = 'mps' instead (untested)
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
```

DreamBooth fine-tunes Stable Diffusion to inject additional information, such as a specific face, object, or style. For a first impression, watch the video Corridor Crew made with DreamBooth (https://www.bilibili.com/video/BV18o4y1c7R7/?vd_source=c5a5204620e35330e6145843f4df6ea4). DreamBooth models are already available on Hugging Face; the following code loads one:

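```python
from diffusers import StableDiffusionPipeline

# Community DreamBooth models: https://huggingface.co/sd-dreambooth-library
model_id = "sd-dreambooth-library/mr-potato-head"

# Load the pipeline. float16 halves GPU memory use; fall back to float32 on CPU,
# where half precision is poorly supported.
dtype = torch.float16 if device.type == "cuda" else torch.float32
pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=dtype).to(device)

prompt = "an abstract oil painting of sks mr potato head by picasso"
image = pipe(prompt, num_inference_steps=50, guidance_scale=7.5).images[0]
image
```

The generated image is shown below: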

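num_inference_steps: the number of sampling (denoising) steps;

guidance_scale: how closely the model's output should match the prompt (higher values follow the prompt more strictly).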
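In StableDiffusionPipeline, guidance_scale is the weight used for classifier-free guidance: at every denoising step the UNet predicts the noise twice, once with the prompt and once with an empty prompt, and the two predictions are combined as uncond + guidance_scale * (cond - uncond). A scale of 1 reduces to the prompt-conditioned prediction alone, and larger values push further toward the prompt. The sketch below shows only that combination step, on plain Python lists with made-up numbers; `apply_guidance` is a hypothetical helper for illustration, not a diffusers function.

```python
def apply_guidance(noise_uncond, noise_text, guidance_scale):
    """Classifier-free guidance: start from the unconditional prediction and
    move toward the prompt-conditioned one, scaled by guidance_scale."""
    return [u + guidance_scale * (c - u) for u, c in zip(noise_uncond, noise_text)]


# Made-up noise predictions for three "pixels"
uncond = [0.10, -0.20, 0.05]  # prediction with an empty prompt
cond = [0.30, -0.10, 0.00]    # prediction with the actual prompt

for scale in (1.0, 7.5):
    guided = apply_guidance(uncond, cond, scale)
    print(scale, [round(v, 3) for v in guided])
```

With scale 7.5 each value moves well past the conditional prediction, which is why very large scales can produce oversaturated images.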

```python
import torchvision
from datasets import load_dataset
from torchvision import transforms

dataset = load_dataset("huggan/smithsonian_butterflies_subset", split="train")

# You can also load images from a local folder:
# dataset = load_dataset("imagefolder", data_dir="path/to/folder")

# We train on 32x32-pixel square images; feel free to try larger sizes
image_size = 32

# Reduce batch_size if you run out of GPU memory
batch_size = 64

# Preprocessing, including light data augmentation
preprocess = transforms.Compose(
    [
        transforms.Resize((image_size, image_size)),  # resize
        transforms.RandomHorizontalFlip(),  # random horizontal flip
        transforms.ToTensor(),  # convert to a tensor with values in [0, 1]
        transforms.Normalize([0.5], [0.5]),  # map to [-1, 1]
    ]
)


def transform(examples):
    images = [preprocess(image.convert("RGB")) for image in examples["image"]]
    return {"images": images}


dataset.set_transform(transform)

# Create a data loader that serves batches of transformed images
train_dataloader = torch.utils.data.DataLoader(
    dataset, batch_size=batch_size, shuffle=True
)
```

Visualize part of the dataset:

```python
xb = next(iter(train_dataloader))["images"].to(device)[:8]
print("X shape:", xb.shape)
show_images(xb).resize((8 * 64, 64), resample=Image.NEAREST)
```

```
# Output
X shape: torch.Size([8, 3, 32, 32])
```
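The preprocessing and show_images are inverses on the pixel range: ToTensor yields values in [0, 1], Normalize([0.5], [0.5]) computes (x - 0.5) / 0.5, and show_images undoes that with x * 0.5 + 0.5 before scaling to 0-255. A scalar sketch of the round trip follows; the helper names are ours, for illustration only.

```python
def normalize(x, mean=0.5, std=0.5):
    # What transforms.Normalize([0.5], [0.5]) does to each value
    return (x - mean) / std


def denormalize(x):
    # What show_images() does first: map [-1, 1] back to [0, 1]
    return x * 0.5 + 0.5


def to_uint8(x):
    # Like show_images(): clip to [0, 1], scale to 0-255, then truncate to an integer
    return int(min(max(x, 0.0), 1.0) * 255)


pixels = [0.0, 0.25, 0.5, 1.0]           # values as produced by ToTensor()
normed = [normalize(p) for p in pixels]  # what the model is trained on
restored = [denormalize(v) for v in normed]
print(normed)
print([to_uint8(v) for v in restored])
```

The clip in show_images matters at generation time: a model's output can stray slightly outside [-1, 1], and clipping keeps those values from wrapping around when cast to 8-bit.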

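Both training a diffusion model and running inference with it are driven by a scheduler. The noise scheduler decides how much noise is added at each timestep, and there are two common ways to configure it: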

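```python
from diffusers import DDPMScheduler

# Method 1: adds only a small amount of noise
# noise_scheduler = DDPMScheduler(num_train_timesteps=1000, beta_start=0.001, beta_end=0.004)

# Method 2: the 'cosine' schedule, which may suit small images better
# (active here; comment it out and uncomment method 1 to compare)
noise_scheduler = DDPMScheduler(num_train_timesteps=1000, beta_schedule="squaredcos_cap_v2")
```

Parameters: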

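beta_start: the beta value (the variance of the noise added in one step) at the first timestep of the schedule;

beta_end: the beta value at the last timestep;

beta_schedule: the rule that assigns a beta value to each timestep, for example "linear" (evenly spaced from beta_start to beta_end) or "squaredcos_cap_v2" (the cosine schedule, which does not use beta_start or beta_end).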
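To see what these parameters change, the sketch below builds beta schedules in plain Python and prints alpha_bar_t, the running product of (1 - beta) that determines how much of the clean image survives at step t. The linear schedule mirrors beta_start/beta_end (0.0001 and 0.02 are the DDPMScheduler defaults); the cosine schedule follows the published squared-cosine formula behind "squaredcos_cap_v2", including the 0.999 cap on each beta. Treat it as a sketch of the math, not the library's code.

```python
import math


def linear_betas(num_steps, beta_start=0.0001, beta_end=0.02):
    # Evenly spaced betas from beta_start to beta_end (like beta_schedule="linear")
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cosine_betas(num_steps, max_beta=0.999):
    # Squared-cosine schedule: define alpha_bar(t) directly, then derive each
    # beta from the ratio of consecutive alpha_bar values, capped at max_beta.
    def alpha_bar(t):
        return math.cos((t + 0.008) / 1.008 * math.pi / 2) ** 2

    return [min(1 - alpha_bar((i + 1) / num_steps) / alpha_bar(i / num_steps), max_beta)
            for i in range(num_steps)]


def alphas_cumprod(betas):
    # alpha_bar_t = (1 - beta_0) * (1 - beta_1) * ... * (1 - beta_t)
    out, running = [], 1.0
    for b in betas:
        running *= 1 - b
        out.append(running)
    return out


schedules = {
    "linear (defaults)": linear_betas(1000),
    "linear 0.001-0.004": linear_betas(1000, 0.001, 0.004),
    "squared cosine": cosine_betas(1000),
}
for name, betas in schedules.items():
    ab = alphas_cumprod(betas)
    print(f"{name:<20} alpha_bar at t=0: {ab[0]:.4f}  t=500: {ab[500]:.4f}  t=999: {ab[999]:.2e}")
```

The 0.001-0.004 range from method 1 leaves noticeably more of the image at the final step than the defaults, which is what "adds only a small amount of noise" refers to.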

Whichever scheduler you choose, noise_scheduler.add_noise adds noise to the images, with the amount depending on the timestep:

```python
timesteps = torch.linspace(0, 999, 8).long().to(device)
noise = torch.randn_like(xb)
noisy_xb = noise_scheduler.add_noise(xb, noise, timesteps)
print("Noisy X shape", noisy_xb.shape)
show_images(noisy_xb).resize((8 * 64, 64), resample=Image.NEAREST)
```

```
# Output
Noisy X shape torch.Size([8, 3, 32, 32])
```

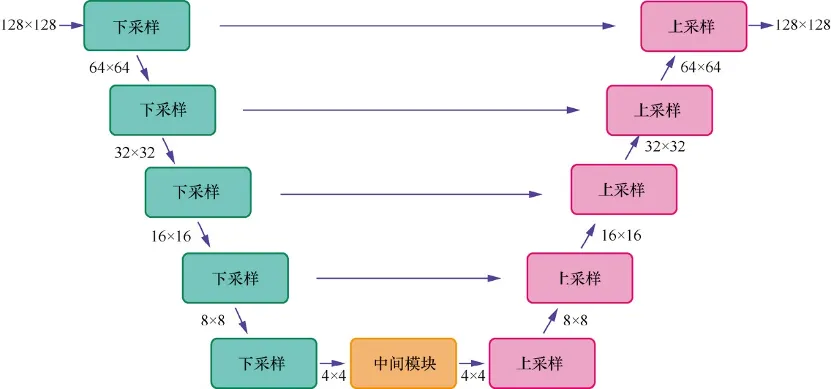
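Per pixel, add_noise computes x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * noise, where alpha_bar_t is the scheduler's running product of (1 - beta) up to step t. This closed form is why training can jump straight to any timestep. The scalar sketch below uses hand-picked alpha_bar values rather than ones read from a real scheduler.

```python
import math
import random


def add_noise(x0, noise, alpha_bar_t):
    # Closed-form forward diffusion: jump straight from the clean sample x0
    # to timestep t without simulating the steps in between.
    return [math.sqrt(alpha_bar_t) * x + math.sqrt(1 - alpha_bar_t) * n
            for x, n in zip(x0, noise)]


rng = random.Random(0)
x0 = [0.8, -0.3, 0.5, -1.0]            # a tiny "image" with values in [-1, 1]
noise = [rng.gauss(0, 1) for _ in x0]  # standard Gaussian noise, like torch.randn_like

# Hand-picked alpha_bar values standing in for an early, a middle and a late timestep
for alpha_bar_t in (0.999, 0.5, 0.001):
    print(alpha_bar_t, [round(v, 3) for v in add_noise(x0, noise, alpha_bar_t)])
```

Near t = 0 the output is almost the clean image; near the last timestep it is almost pure noise, matching the left-to-right progression in the figure.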

Next is the model, another core Diffusers concept. This article uses a UNet, whose architecture is shown in the figure below:

In the code below, down_block_types defines the downsampling path (the green part of the figure) and up_block_types defines the upsampling path (the pink part).

```python
from diffusers import UNet2DModel

# Create the model
model = UNet2DModel(
    sample_size=image_size,  # target image resolution
    in_channels=3,  # number of input channels (3 for RGB images)
    out_channels=3,  # number of output channels
    layers_per_block=2,  # number of ResNet layers per UNet block
    block_out_channels=(64, 128, 128, 256),  # more channels -> more parameters
    down_block_types=(
        "DownBlock2D",  # a regular ResNet downsampling block
        "DownBlock2D",
        "AttnDownBlock2D",  # a ResNet downsampling block with spatial self-attention
        "AttnDownBlock2D",
    ),
    up_block_types=(
        "AttnUpBlock2D",
        "AttnUpBlock2D",  # a ResNet upsampling block with spatial self-attention
        "UpBlock2D",
        "UpBlock2D",  # a regular ResNet upsampling block
    ),
)

model.to(device)
```
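With four blocks and sample_size=32, the feature maps shrink from 32x32 to 4x4 on the way down. The sketch below traces the down path under one assumption we should flag: that, as in UNet2DModel's usual setup, every down block except the last ends with a 2x downsampler, and the up path mirrors this. It also suggests why the attention blocks sit at the end of the down path: self-attention compares every position with every other one, so its cost grows with the square of the number of positions, and 8x8 or 4x4 maps are far cheaper than 32x32.

```python
sample_size = 32
block_out_channels = (64, 128, 128, 256)
down_block_types = ("DownBlock2D", "DownBlock2D", "AttnDownBlock2D", "AttnDownBlock2D")


def down_path(size, channels, block_types):
    """Return (block type, channels, input size, output size) per down block.
    Assumes every block except the last halves the spatial resolution."""
    shapes = []
    for i, (ch, kind) in enumerate(zip(channels, block_types)):
        is_final = i == len(channels) - 1
        out_size = size if is_final else size // 2
        shapes.append((kind, ch, size, out_size))
        size = out_size
    return shapes


# sample_size must survive the repeated halving without a remainder
assert sample_size % 2 ** (len(block_out_channels) - 1) == 0

for kind, ch, size_in, size_out in down_path(sample_size, block_out_channels, down_block_types):
    line = f"{kind:<16} channels={ch:<4} {size_in}x{size_in} -> {size_out}x{size_out}"
    if kind.startswith("Attn"):
        # self-attention runs over every spatial position of the block's input
        line += f"  (attention over {size_in * size_in} positions)"
    print(line)
```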

noise_scheduler = DDPMScheduler(

num_train_timesteps=1000, beta_schedule="squaredcos_cap_v2"

)

# 訓練循環

optimizer = torch.optim.AdamW(model.parameters(), lr=4e-4)

losses = []

for epoch in range(30):

for step, batch in enumerate(train_dataloader):

clean_images = batch["images"].to(device)

# 為圖片添加采樣噪聲

noise = torch.randn(clean_images.shape).to(clean_images.

device)

bs = clean_images.shape[0]

# 為每張圖片隨機采樣一個時間步

timesteps = torch.randint(

0, noise_scheduler.num_train_timesteps, (bs,),

device=clean_images.device

).long()

# 根據每個時間步的噪聲幅度,向清晰的圖片中添加噪聲

noisy_images = noise_scheduler.add_noise(clean_images,

noise, timesteps)

# 獲得模型的預測結果

noise_pred = model(noisy_images, timesteps, return_

dict=False)[0]

# 計算損失

loss = F.mse_loss(noise_pred, noise)

loss.backward(loss)

losses.append(loss.item())

# 迭代模型參數

optimizer.step()

optimizer.zero_grad()

if (epoch + 1) % 5 == 0:

loss_last_epoch = sum(losses[-len(train_dataloader) :]) /

len(train_dataloader)

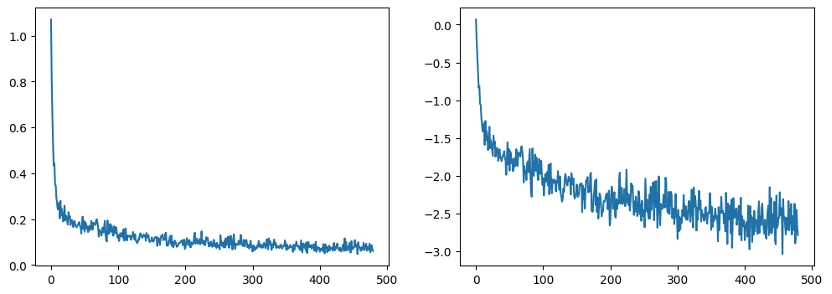

print(f"Epoch:{epoch+1}, loss: {loss_last_epoch}")我們繪制一下訓練過程中損失變化:

```python
fig, axs = plt.subplots(1, 2, figsize=(12, 4))
axs[0].plot(losses)  # raw loss per step
axs[1].plot(np.log(losses))  # log scale makes late-training changes easier to see
plt.show()
```
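A useful yardstick when reading the loss curve: the training target is standard Gaussian noise, so a model that always predicts zeros scores an MSE of about 1 (the variance of the noise), whatever the timestep. The plain-Python sketch below replays the steps of the training loop (random timestep, Gaussian noise, closed-form noisy input, MSE against the true noise) with a hypothetical all-zeros model and a linear schedule, and averages the loss. The baseline does not depend on the schedule; a model that has learned something should land clearly below it.

```python
import math
import random


def make_alphas_cumprod(num_steps=1000, beta_start=0.0001, beta_end=0.02):
    # Linear beta schedule and its running product of (1 - beta)
    out, running = [], 1.0
    for i in range(num_steps):
        beta = beta_start + i * (beta_end - beta_start) / (num_steps - 1)
        running *= 1 - beta
        out.append(running)
    return out


def training_loss(x0, alphas_cumprod, predict_noise, rng):
    """One training example, following the same steps as the loop above."""
    t = rng.randrange(len(alphas_cumprod))              # 1) random timestep
    noise = [rng.gauss(0, 1) for _ in x0]               # 2) Gaussian noise
    ab = alphas_cumprod[t]
    noisy = [math.sqrt(ab) * x + math.sqrt(1 - ab) * n  # 3) noisy input
             for x, n in zip(x0, noise)]
    pred = predict_noise(noisy, t)                       # 4) model prediction
    return sum((p - n) ** 2 for p, n in zip(pred, noise)) / len(noise)  # 5) MSE


def zero_model(noisy, t):
    # Hypothetical baseline that ignores its input and predicts no noise at all
    return [0.0] * len(noisy)


rng = random.Random(42)
alphas_cumprod = make_alphas_cumprod()
x0 = [rng.uniform(-1, 1) for _ in range(3 * 32 * 32)]  # one fake 3x32x32 image, flattened
losses = [training_loss(x0, alphas_cumprod, zero_model, rng) for _ in range(200)]
baseline = sum(losses) / len(losses)
print(f"average loss of the all-zeros baseline: {baseline:.3f}")
```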

4.5 Generating Images

We will generate images in two ways.

Method 1: Build a pipeline

```python
from diffusers import DDPMPipeline

image_pipe = DDPMPipeline(unet=model, scheduler=noise_scheduler)
pipeline_output = image_pipe()
pipeline_output.images[0]
```

Save the pipeline to a local folder:

```python
image_pipe.save_pretrained("my_pipeline")
```

Let's look at what was saved in the my_pipeline folder:

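```
!ls my_pipeline/
```

```
# Output
model_index.json  scheduler  unet
```

The scheduler and unet subfolders contain all the components needed to generate images. The unet subfolder holds config.json, which describes the model architecture, and diffusion_pytorch_model.bin, which holds the model weights (recent versions of diffusers may write diffusion_pytorch_model.safetensors instead):

```
!ls my_pipeline/unet/
```

```
# Output
config.json  diffusion_pytorch_model.bin
```

To share the model, we only need to upload the scheduler and unet subfolders, together with model_index.json, to the Hugging Face Hub.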

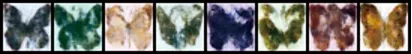
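Why the upload has to keep the folder layout: model_index.json lists the pipeline's components, and from_pretrained loads each one from the subfolder with the same name. The sketch below parses an illustrative model_index.json in that format (the contents are our reconstruction for illustration, and the version field is a placeholder, not what save_pretrained actually writes) and derives which paths must exist in the Hub repository.

```python
import json

# Illustrative model_index.json for a DDPMPipeline (version is a placeholder)
model_index_text = """
{
  "_class_name": "DDPMPipeline",
  "_diffusers_version": "x.y.z",
  "scheduler": ["diffusers", "DDPMScheduler"],
  "unet": ["diffusers", "UNet2DModel"]
}
"""


def components(index):
    # Keys starting with "_" are metadata; every other key names a subfolder
    # and maps to [library, class name].
    return {name: spec[1] for name, spec in index.items() if not name.startswith("_")}


def files_to_upload(index):
    # The repo needs model_index.json plus one subfolder per component
    return ["model_index.json"] + [f"{name}/" for name in sorted(components(index))]


index = json.loads(model_index_text)
print(components(index))
print(files_to_upload(index))
```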

Method 2: A sampling loop over timesteps t

Here we sample step by step over the timesteps t and look at the results:

```python
# Start from random noise (8 random images)
sample = torch.randn(8, 3, 32, 32).to(device)

for i, t in enumerate(noise_scheduler.timesteps):
    # Get the model's prediction
    with torch.no_grad():
        residual = model(sample, t).sample

    # Update the sample based on the prediction
    sample = noise_scheduler.step(residual, t, sample).prev_sample

show_images(sample)
```
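noise_scheduler.step(residual, t, sample) performs one reverse DDPM step. The standard update (Ho et al., 2020) is x_{t-1} = (x_t - beta_t / sqrt(1 - alpha_bar_t) * eps) / sqrt(alpha_t) + sigma_t * z, where eps is the model's noise prediction, alpha_t = 1 - beta_t, and z is fresh Gaussian noise. diffusers computes an equivalent update through a predicted clean image and adds options of its own, so the plain-Python sketch below illustrates the math rather than reproducing the library. To check the arithmetic it uses a hypothetical "oracle" that knows the clean image; with a perfect noise prediction, the loop lands back on that image.

```python
import math
import random


def ddpm_step(x_t, eps_pred, t, betas, alphas_cumprod, rng):
    """One reverse DDPM update x_t -> x_{t-1} on a list of pixel values."""
    beta_t = betas[t]
    alpha_t = 1 - beta_t
    ab_t = alphas_cumprod[t]
    ab_prev = alphas_cumprod[t - 1] if t > 0 else 1.0
    # Mean: subtract the scaled noise prediction, then rescale
    coef = beta_t / math.sqrt(1 - ab_t)
    mean = [(x - coef * e) / math.sqrt(alpha_t) for x, e in zip(x_t, eps_pred)]
    # Posterior variance; it is 0 at t == 0, so the final step adds no noise
    var = beta_t * (1 - ab_prev) / (1 - ab_t)
    return [m + math.sqrt(var) * rng.gauss(0, 1) for m in mean]


# Linear schedule with DDPMScheduler's default beta range, for this demo only
num_steps = 1000
betas = [0.0001 + i * (0.02 - 0.0001) / (num_steps - 1) for i in range(num_steps)]
alphas_cumprod, running = [], 1.0
for b in betas:
    running *= 1 - b
    alphas_cumprod.append(running)

rng = random.Random(0)
x0 = [0.8, -0.3, 0.5, -1.0]             # the "image" that the oracle below knows
sample = [rng.gauss(0, 1) for _ in x0]  # start from pure noise, like torch.randn above


def oracle_noise(x_t, t):
    # Hypothetical perfect noise predictor, used only to check the arithmetic;
    # in the article, the trained UNet plays this role.
    ab = alphas_cumprod[t]
    return [(x - math.sqrt(ab) * c) / math.sqrt(1 - ab) for x, c in zip(x_t, x0)]


for t in reversed(range(num_steps)):    # 999, 998, ..., 0
    sample = ddpm_step(sample, oracle_noise(sample, t), t, betas, alphas_cumprod, rng)

print([round(v, 4) for v in sample])
```

A trained UNet only approximates the oracle, which is why the samples above come out as plausible butterflies rather than copies of training images.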

Next, define the name of the model to upload; the full name includes your username:

```python
from huggingface_hub import get_full_repo_name

model_name = "sd-class-butterflies-32"
hub_model_id = get_full_repo_name(model_name)
hub_model_id
```

```
# Output
Arron/sd-class-butterflies-32
```

Create a model repository on the Hugging Face Hub and upload the files:

```python
from huggingface_hub import HfApi, create_repo

create_repo(hub_model_id, exist_ok=True)  # exist_ok avoids an error if the repo already exists
api = HfApi()

# Keep the scheduler/ and unet/ subfolders: from_pretrained looks for the components there
api.upload_folder(
    folder_path="my_pipeline/scheduler", path_in_repo="scheduler", repo_id=hub_model_id
)
api.upload_folder(
    folder_path="my_pipeline/unet", path_in_repo="unet", repo_id=hub_model_id
)
api.upload_file(
    path_or_fileobj="my_pipeline/model_index.json",
    path_in_repo="model_index.json",
    repo_id=hub_model_id,
)
```

```
# Output
https://huggingface.co/Arron/sd-class-butterflies-32/blob/main/model_index.json
```

Create a model card describing the model:

````python
from huggingface_hub import ModelCard

content = f"""
---
license: mit
tags:
- pytorch
- diffusers
- unconditional-image-generation
- diffusion-models-class
---

# Unconditional image-generation diffusion model for butterfly images

```python
from diffusers import DDPMPipeline

pipeline = DDPMPipeline.from_pretrained('{hub_model_id}')
image = pipeline().images[0]
image
```
"""

card = ModelCard(content)
card.push_to_hub(hub_model_id)
````

```
# Output
https://huggingface.co/Arron/sd-class-butterflies-32/blob/main/README.md
```

Our trained model is now on the Hugging Face Hub, and DDPMPipeline's from_pretrained method can load it:

```python
from diffusers import DDPMPipeline

image_pipe = DDPMPipeline.from_pretrained(hub_model_id)
pipeline_output = image_pipe()
pipeline_output.images[0]
```

Reposted from the WeChat official account @ArronAI.