
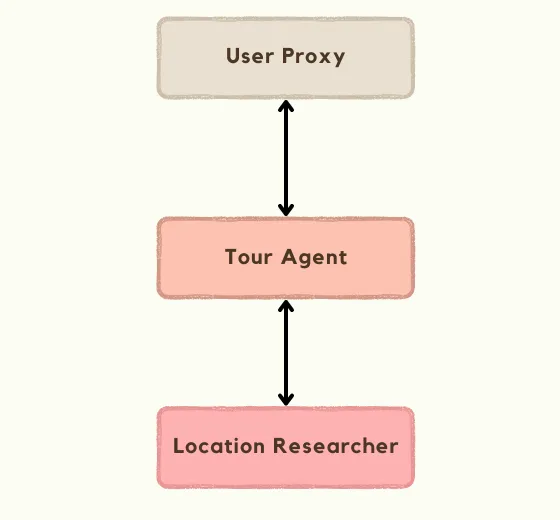

Next, create two assistant agents: the Tour Agent and the Location Researcher.

The Tour Agent is a simple Assistant Agent with a custom system prompt that describes its role and responsibilities. The prompt also tells the agent to append TERMINATE to the end of its final answer to the user.

    tour_agent = AssistantAgent(
        "tour_agent",
        human_input_mode="NEVER",
        llm_config={
            "config_list": config_list,
            "cache_seed": None,
        },
        system_message="You are a Tour Agent who helps users plan a trip based on user requirements. You can get help from the Location Researcher to research and find details about a certain location, attractions, restaurants, accommodation, etc. You use those details to answer user questions, create trip itineraries, make recommendations with practical logistics according to the user's requirements. Report the final answer when you have finalized it. Add TERMINATE to the end of this report.",
    )

The Location Researcher, on the other hand, is created with a function that it can call and execute to search Google Maps. The actual schema and implementation of that function are covered later. The snippet below shows how to attach them to the Assistant Agent together with a custom prompt.

    location_researcher = AssistantAgent(
        "location_researcher",
        human_input_mode="NEVER",
        system_message="You are the location researcher who is helping the Tour Agent plan a trip according to user requirements. You can use the search_google_maps function to retrieve details about a certain location, attractions, restaurants, accommodation, etc. for your research. You process results from these functions and present your findings to the Tour Agent to help them with itinerary and trip planning.",
        llm_config={
            "config_list": config_list,
            "cache_seed": None,
            # Schema that tells the LLM how to call the function
            "functions": [
                SEARCH_GOOGLE_MAPS_SCHEMA,
            ],
        },
        # Maps the function name in the schema to the Python callable that runs it
        function_map={
            "search_google_maps": search_google_maps,
        },
    )

Then, create the User Proxy. Although the User Proxy plays no active role in this agent system, it is essential for accepting user messages and for detecting when the reply sequence for a user query has ended, before the response is sent to the user.

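    def terminate_agent_at_reply(
        recipient: Agent,
        messages: Optional[List[Dict]] = None,
        sender: Optional[Agent] = None,
        config: Optional[Any] = None,
    ) -> Tuple[bool, Union[str, None]]:
        # Always report a final reply of None, which ends the reply sequence
        return True, None

    user_proxy = UserProxyAgent(
        "user_proxy",
        # content may be None (for example on function-call messages), so fall back to ""
        is_termination_msg=lambda x: "TERMINATE" in (x.get("content") or ""),
        human_input_mode="NEVER",
        code_execution_config=False,
    )

    user_proxy.register_reply([Agent, None], terminate_agent_at_reply)

I have registered a new reply function on the User Proxy that simply returns True and None. To see how this ends the chat sequence, you need to understand how Autogen uses reply functions.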

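When an agent generates a reply, Autogen relies on the list of reply functions registered on that agent. It calls the first function in the list; if that function can produce a final reply, it returns (True, reply). If it returns False, meaning the function could not produce a reply, Autogen moves on to the next function in the list.

Autogen supports different ways for an agent to reply, such as requesting human feedback, executing code, executing functions, or generating an LLM reply.

When I register terminate_agent_at_reply as a reply function, it is added to the front of this list and becomes the first reply function to be called. Because it always returns (True, None), it prevents the user proxy from sending an auto-reply or using the other reply functions to generate an LLM reply. Using None as the reply stops the group chat from continuing with further chat rounds.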
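The dispatch order described above can be illustrated with a small toy model. This is only a sketch of the idea and not Autogen's implementation: `MiniAgent`, `llm_reply`, `no_human_input`, and the simplified single-argument reply signature are all hypothetical names introduced for illustration.

```python
from typing import Any, Callable, Dict, List, Optional, Tuple

# A reply function returns (is_final, reply), mirroring the contract described above.
ReplyFunc = Callable[[List[Dict[str, Any]]], Tuple[bool, Optional[str]]]


class MiniAgent:
    """Toy stand-in for an agent (hypothetical, not the Autogen class)."""

    def __init__(self) -> None:
        self._reply_funcs: List[ReplyFunc] = []

    def register_reply(self, func: ReplyFunc, position: int = 0) -> None:
        # Position 0 places the new function at the front of the list.
        self._reply_funcs.insert(position, func)

    def generate_reply(self, messages: List[Dict[str, Any]]) -> Optional[str]:
        # Try each function in order; the first one that reports a final reply wins.
        for func in self._reply_funcs:
            is_final, reply = func(messages)
            if is_final:
                return reply
        return None


def llm_reply(messages: List[Dict[str, Any]]) -> Tuple[bool, Optional[str]]:
    # Pretend LLM: always produces a final reply.
    return True, "LLM answer to: " + messages[-1]["content"]


def no_human_input(messages: List[Dict[str, Any]]) -> Tuple[bool, Optional[str]]:
    # Cannot produce a reply, so the next function in the list is tried.
    return False, None


def terminate_at_reply(messages: List[Dict[str, Any]]) -> Tuple[bool, Optional[str]]:
    # Final reply of None: nothing is sent, and later functions never run.
    return True, None


agent = MiniAgent()
agent.register_reply(llm_reply)
agent.register_reply(no_human_input)      # now first, but falls through to llm_reply
before = agent.generate_reply([{"content": "hi"}])

agent.register_reply(terminate_at_reply)  # now first, short-circuits everything else
after = agent.generate_reply([{"content": "hi"}])
```

In this toy model `before` holds the pretend LLM answer, while `after` is `None` because the terminating function sits at the front of the list.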

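Finally, I create the group chat and the manager agent that allow all of these agents to collaborate.

    group_chat = GroupChat(
        agents=[user_proxy, location_researcher, tour_agent],
        messages=[],
        allow_repeat_speaker=False,
        max_round=20,
    )

    group_chat_manager = GroupChatManager(
        group_chat,
        # content may be None (for example on function-call messages), so fall back to ""
        is_termination_msg=lambda x: "TERMINATE" in (x.get("content") or ""),
        llm_config={
            "config_list": config_list,
            "cache_seed": None,
        },
    )

Here I let the group chat use the default speaker selection method, auto, because no other option suits this use case. I also set the chat manager's is_termination_msg parameter to check whether the message content contains TERMINATE.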

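So why set another termination condition here when I have already used the terminate_agent_at_reply function for the user proxy?

It acts as a failsafe in case the LLM selects an agent other than the user proxy as the next speaker after the Tour Agent's final answer.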
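One detail is worth guarding against in a termination check like this. A plain `in` test against `message.get("content", "")` raises a `TypeError` when the key is present but holds `None`, because the default only applies when the key is missing. Assuming some messages (for example function-call messages) can carry `None` as content, a more tolerant predicate might look like the sketch below. The helper name `is_termination_msg` is chosen here only to match the parameter name.

```python
from typing import Any, Dict


def is_termination_msg(message: Dict[str, Any]) -> bool:
    """Return True when the message content contains the TERMINATE marker."""
    # `or ""` covers both a missing key and an explicit None value.
    content = message.get("content") or ""
    return "TERMINATE" in content


final_answer = {"role": "assistant", "content": "Here is your itinerary. TERMINATE"}
function_call = {"role": "assistant", "content": None}
```

A function like this can be passed wherever a lambda is used for `is_termination_msg`, which also keeps the user proxy and the chat manager on the same check.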

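4.5 Putting it all together in one class

Now I can put all of this agent logic into a single class, and add a method that accepts a user message from the API and returns the final reply once the reply sequence has finished.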

    # agent_group.py
    import os
    from typing import Any, Dict, List, Optional, Tuple, Union

    from autogen import Agent, AssistantAgent, GroupChat, GroupChatManager, UserProxyAgent
    from dotenv import load_dotenv

    from functions import search_google_maps, SEARCH_GOOGLE_MAPS_SCHEMA

    load_dotenv()

    config_list = [{
        'model': 'gpt-3.5-turbo-1106',
        'api_key': os.getenv("OPENAI_API_KEY"),
    }]


    class AgentGroup:
        def __init__(self):
            self.user_proxy = UserProxyAgent(
                "user_proxy",
                # content may be None (for example on function-call messages), so fall back to ""
                is_termination_msg=lambda x: "TERMINATE" in (x.get("content") or ""),
                human_input_mode="NEVER",
                code_execution_config=False,
            )
            # Goes to the front of the reply list, so the user proxy never auto-replies
            self.user_proxy.register_reply([Agent, None], AgentGroup.terminate_agent_at_reply)

            self.location_researcher = AssistantAgent(
                "location_researcher",
                human_input_mode="NEVER",
                system_message="You are the location researcher who is helping the Tour Agent plan a trip according to user requirements. You can use the search_google_maps function to retrieve details about a certain location, attractions, restaurants, accommodation, etc. for your research. You process results from these functions and present your findings to the Tour Agent to help them with itinerary and trip planning.",
                llm_config={
                    "config_list": config_list,
                    "cache_seed": None,
                    "functions": [
                        SEARCH_GOOGLE_MAPS_SCHEMA,
                    ],
                },
                function_map={
                    "search_google_maps": search_google_maps,
                },
            )

            self.tour_agent = AssistantAgent(
                "tour_agent",
                human_input_mode="NEVER",
                llm_config={
                    "config_list": config_list,
                    "cache_seed": None,
                },
                system_message="You are a Tour Agent who helps users plan a trip based on user requirements. You can get help from the Location Researcher to research and find details about a certain location, attractions, restaurants, accommodation, etc. You use those details to answer user questions, create trip itineraries, make recommendations with practical logistics according to the user's requirements. Report the final answer when you have finalized it. Add TERMINATE to the end of this report.",
            )

            self.group_chat = GroupChat(
                agents=[self.user_proxy, self.location_researcher, self.tour_agent],
                messages=[],
                allow_repeat_speaker=False,
                max_round=20,
            )

            self.group_chat_manager = GroupChatManager(
                self.group_chat,
                is_termination_msg=lambda x: "TERMINATE" in (x.get("content") or ""),
                llm_config={
                    "config_list": config_list,
                    "cache_seed": None,
                },
            )

        def process_user_message(self, message: str) -> str:
            # clear_history=False keeps earlier reply sequences in the conversation
            self.user_proxy.initiate_chat(self.group_chat_manager, message=message, clear_history=False)
            return self._find_last_non_empty_message()

        def _find_last_non_empty_message(self) -> str:
            conversation = self.tour_agent.chat_messages[self.group_chat_manager]
            # Walk backwards to find the Tour Agent's latest message with real content
            for message in reversed(conversation):
                if message.get("role") == "assistant":
                    # content may be None; strip after removing the marker
                    reply = (message.get("content") or "").replace("TERMINATE", "").strip()
                    if reply:
                        return reply
            return "No reply received"

        @staticmethod
        def terminate_agent_at_reply(
            recipient: Agent,
            messages: Optional[List[Dict]] = None,
            sender: Optional[Agent] = None,
            config: Optional[Any] = None,
        ) -> Tuple[bool, Union[str, None]]:
            return True, None

Here, whenever the agent group receives a user message, the user proxy initiates a chat with the group manager using clear_history=False, which preserves the history of previous reply sequences.

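Once the reply sequence has ended, _find_last_non_empty_message finds the last non-empty message sent by the Tour Agent in the chat history and returns it as the answer. When looking for the answer to return, the function allows for some inconsistencies in agent replies and reply sequences.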
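The backward scan can be tried out on a plain list of message dictionaries, without any agents. The sketch below is a standalone variant written for illustration; the function name `last_non_empty_reply` and the sample history are hypothetical, and the kinds of inconsistency shown (a message with no content, a message containing only the marker) are assumptions about what such a history might contain.

```python
from typing import Any, Dict, List


def last_non_empty_reply(
    conversation: List[Dict[str, Any]],
    role: str = "assistant",
    marker: str = "TERMINATE",
) -> str:
    """Return the newest message from `role` that still has text once the marker is removed."""
    for message in reversed(conversation):
        if message.get("role") != role:
            continue
        # content may be missing or None; remove the marker before trimming whitespace
        reply = (message.get("content") or "").replace(marker, "").strip()
        if reply:
            return reply
    return "No reply received"


# Hypothetical history: the real answer is followed by an empty and a marker-only message.
history = [
    {"role": "user", "content": "Plan a trip"},
    {"role": "assistant", "content": "Here is your itinerary. TERMINATE"},
    {"role": "assistant", "content": None},
    {"role": "assistant", "content": "TERMINATE"},
]
answer = last_non_empty_reply(history)
```

Scanning from the end means trailing empty or marker-only messages are skipped, and the answer that precedes them is returned.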

Now I'll create the API endpoint that receives user queries, using FastAPI.

    from typing import Dict

    from fastapi import FastAPI
    from pydantic import BaseModel

    from agent_group import AgentGroup


    class ChatRequest(BaseModel):
        session_id: str
        message: str


    app = FastAPI()

    # One AgentGroup per session, kept in memory for the lifetime of the process
    sessions: Dict[str, AgentGroup] = {}


    @app.post("/chat")
    def chat(request: ChatRequest):
        session_id = request.session_id
        message = request.message

        # Create the agent group the first time a session is seen
        if session_id not in sessions:
            sessions[session_id] = AgentGroup()

        agent_group = sessions[session_id]
        reply = agent_group.process_user_message(message)

        return {"reply": reply, "status": "success"}

Finally, let's go back to what was left out in the earlier step: the schema and implementation of the function call used by the Location Researcher.

    # functions.py
    import os
    from typing import Any, Dict, List

    from dotenv import load_dotenv
    from serpapi import GoogleSearch

    load_dotenv()

    SEARCH_GOOGLE_MAPS_SCHEMA = {
        "name": "search_google_maps",
        "description": "Search google maps using Google Maps API",
        "parameters": {
            "type": "object",
            "properties": {
                "query": {
                    "type": "string",
                    "description": "A concise search query for searching places on Google Maps"
                }
            },
            "required": ["query"]
        }
    }


    def search_google_maps(query: str) -> List[Dict[str, Any]]:
        params = {
            "engine": "google_maps",
            "q": query,
            "type": "search",
            "api_key": os.getenv("SERP_API_KEY"),
        }

        results = _search(params)
        # Fall back to an empty list when the response has no local results
        top_results = results.get("local_results", [])[:10]

        # Fetch the full details of each place by its place ID
        return [_populate_place_data(place["place_id"]) for place in top_results]


    def _populate_place_data(place_id: str) -> Dict[str, Any]:
        params = {
            "engine": "google_maps",
            "type": "place",
            "place_id": place_id,
            "api_key": os.getenv("SERP_API_KEY"),
        }

        data = _search(params)
        return _prepare_place_data(data["place_results"])


    def _prepare_place_data(place: Dict) -> Dict[str, Any]:
        # Keep only the fields that are useful to the agents
        return {
            "name": place.get("title"),
            "rating": place.get("rating"),
            "price": place.get("price"),
            "type": place.get("type"),
            "address": place.get("address"),
            "phone": place.get("phone"),
            "website": place.get("website"),
            "description": place.get("description"),
            "operating_hours": place.get("operating_hours"),
            "amenities": place.get("amenities"),
            "service_options": place.get("service_options"),
        }


    def _search(params: Dict[str, str]) -> Dict[str, Any]:
        search = GoogleSearch(params)
        return search.get_dict()

The search_google_maps function takes the search query submitted by the agent and sends it to SerpApi's Google Maps API. It then uses the place IDs to retrieve more details about the top 10 places in the results. Finally, it builds a simplified object from those details and returns it.
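The two-step flow described above (search first, then one detail lookup per place) can be exercised without an API key by passing the search call in as a parameter. This is a sketch under assumptions: `search_places`, `fake_search`, and the canned responses are hypothetical, and only the `local_results`, `place_id`, and `place_results` keys are taken from the code in this article.

```python
from typing import Any, Callable, Dict, List

# Any callable that takes request parameters and returns a response dictionary.
SearchFn = Callable[[Dict[str, str]], Dict[str, Any]]


def search_places(query: str, search: SearchFn, limit: int = 10) -> List[Dict[str, Any]]:
    """Search for places, then fetch details for at most `limit` of them."""
    listing = search({"engine": "google_maps", "type": "search", "q": query})
    places = listing.get("local_results", [])[:limit]

    details = []
    for place in places:
        place_id = place.get("place_id")
        if not place_id:
            continue  # skip entries that cannot be looked up
        result = search({"engine": "google_maps", "type": "place", "place_id": place_id})
        info = result.get("place_results", {})
        details.append({"name": info.get("title"), "rating": info.get("rating")})
    return details


def fake_search(params: Dict[str, str]) -> Dict[str, Any]:
    """Stand-in for the real API call, returning canned data (hypothetical)."""
    if params["type"] == "search":
        return {"local_results": [{"place_id": "a"}, {"place_id": "b"}, {"title": "no id"}]}
    return {"place_results": {"title": "Place " + params["place_id"], "rating": 4.5}}


found = search_places("hikes in Ubud", fake_search, limit=2)
```

Injecting the search function also makes the cost of the design visible: each query triggers one listing request plus up to `limit` detail requests.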

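It is finally time to run the application and see how it performs.

I'm trying an example in which the user sends a request to plan an itinerary for a trip to Bali.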

Create a week long itinerary to Ubud, Bali for myself for May 2024. I’m going solo and I love exploring nature and going on hikes and activities like that. I have a mid-level budget and I want the itinerary to be relaxing not too packed.

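The Tour Agent considers the request and contacts the Location Researcher several times to get details about attractions, restaurants, and accommodation options on the island.

Based on this information, the Tour Agent provides the final answer. The user then gives more feedback on that answer and continues the chat with further requests.

In the Tour Agent system above, Autogen was successfully configured to better suit an API-based application in which the internal agent communication is hidden from the user. However, it still has some drawbacks and unpredictable behavior.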


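Although these drawbacks are not critical for a use case like this one, that may not hold for other scenarios. The problems also become more apparent as the number of agents in the group chat grows.

However, if you want to bring more predictability and consistency to the agent group chat, there are still ways to customize Autogen's built-in behavior.

The Autogen GroupChat class has a select_speaker_msg method that you can override to specify how speaker selection is managed.

Here is the original prompt, for reference.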

    def select_speaker_msg(self, agents: List[Agent]) -> str:
        """Return the system message for selecting the next speaker. This is always the *first* message in the context."""
        return f"""You are in a role play game. The following roles are available:
    {self._participant_roles(agents)}.
    Read the following conversation.
    Then select the next role from {[agent.name for agent in agents]} to play. Only return the role."""

You can update this message to spell out which agent should be selected in which situation, making speaker selection more consistent.
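As a rough illustration, a customized message could add explicit selection rules to the original wording. The sketch below builds such a message as a plain string so that it runs without Autogen; `build_select_speaker_msg`, the role descriptions, and the rules are all hypothetical, and the string it returns is what an overridden select_speaker_msg might return.

```python
from typing import Dict, List


def build_select_speaker_msg(roles: Dict[str, str], rules: List[str]) -> str:
    """Build a speaker-selection prompt that includes explicit routing rules."""
    role_lines = "\n".join(f"{name}: {description}" for name, description in roles.items())
    rule_lines = "\n".join(f"- {rule}" for rule in rules)
    names = list(roles)
    return (
        "You are in a role play game. The following roles are available:\n"
        f"{role_lines}.\n\n"
        "Follow these rules when choosing the next role:\n"
        f"{rule_lines}\n\n"
        "Read the following conversation.\n"
        f"Then select the next role from {names} to play. Only return the role."
    )


# Hypothetical role descriptions and rules for the agents in this article.
prompt = build_select_speaker_msg(
    roles={
        "user_proxy": "Relays the user's request and receives the final answer.",
        "tour_agent": "Plans the trip and writes the final answer.",
        "location_researcher": "Looks up places with search_google_maps.",
    },
    rules=[
        "After user_proxy speaks, select tour_agent.",
        "After a message that ends with TERMINATE, select user_proxy.",
    ],
)
```

Rules stated this way are still only guidance to the LLM, so they narrow the behavior without guaranteeing it.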

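This notebook [2] shows how to define all of the allowed speaker transition paths as a directed graph and override the GroupChat select_speaker method to enforce that behavior. For this use case, for example, you could create a simple graph like this.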
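One possible way to write such a graph down is as an adjacency mapping from each speaker to the speakers allowed to follow it. The edges below are an assumption made for illustration and are not taken from the notebook or the original article; `ALLOWED_TRANSITIONS`, `allowed_next_speakers`, and `enforce_transition` are hypothetical names, and the select_speaker override itself is not shown so that the sketch runs without Autogen.

```python
from typing import Dict, List, Optional

# Hypothetical transition graph: speaker -> speakers that may follow it.
ALLOWED_TRANSITIONS: Dict[str, List[str]] = {
    "user_proxy": ["tour_agent"],
    "tour_agent": ["location_researcher", "user_proxy"],
    "location_researcher": ["tour_agent"],
}


def allowed_next_speakers(
    last_speaker: str, graph: Optional[Dict[str, List[str]]] = None
) -> List[str]:
    """Return the speakers that may follow `last_speaker` (empty if unknown)."""
    graph = ALLOWED_TRANSITIONS if graph is None else graph
    return list(graph.get(last_speaker, []))


def enforce_transition(
    last_speaker: str, proposed: str, graph: Optional[Dict[str, List[str]]] = None
) -> str:
    """Keep the proposed speaker if the graph allows it, else use the first allowed one."""
    allowed = allowed_next_speakers(last_speaker, graph)
    if not allowed:
        raise ValueError(f"No transition defined from {last_speaker!r}")
    return proposed if proposed in allowed else allowed[0]


# The LLM proposes the researcher right after the user, but the graph routes to tour_agent.
next_speaker = enforce_transition("user_proxy", "location_researcher")
```

Inside an overridden select_speaker, a check like this could either restrict the candidates offered to the LLM or correct its choice afterwards. Depending on how function calls are executed, the graph may need additional edges.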

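These are just a few examples. Ultimately, the right solution depends on your specific needs. You will also have to get creative with prompting and routing to make sure that all agents behave the way you want.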

[1] https://levelup.gitconnected.com/harnessing-the-power-of-autogen-multi-agent-systems-via-api-integration-edb0b9651608

[2] https://github.com/microsoft/autogen/blob/main/notebook/agentchat_graph_modelling_language_using_select_speaker.ipynb

Reposted from the WeChat official account @ArronAI