Inference is where AI goes to work.

Our custom LPU™ is built for this phase, developed in the U.S. with a resilient supply chain for consistent performance at scale.

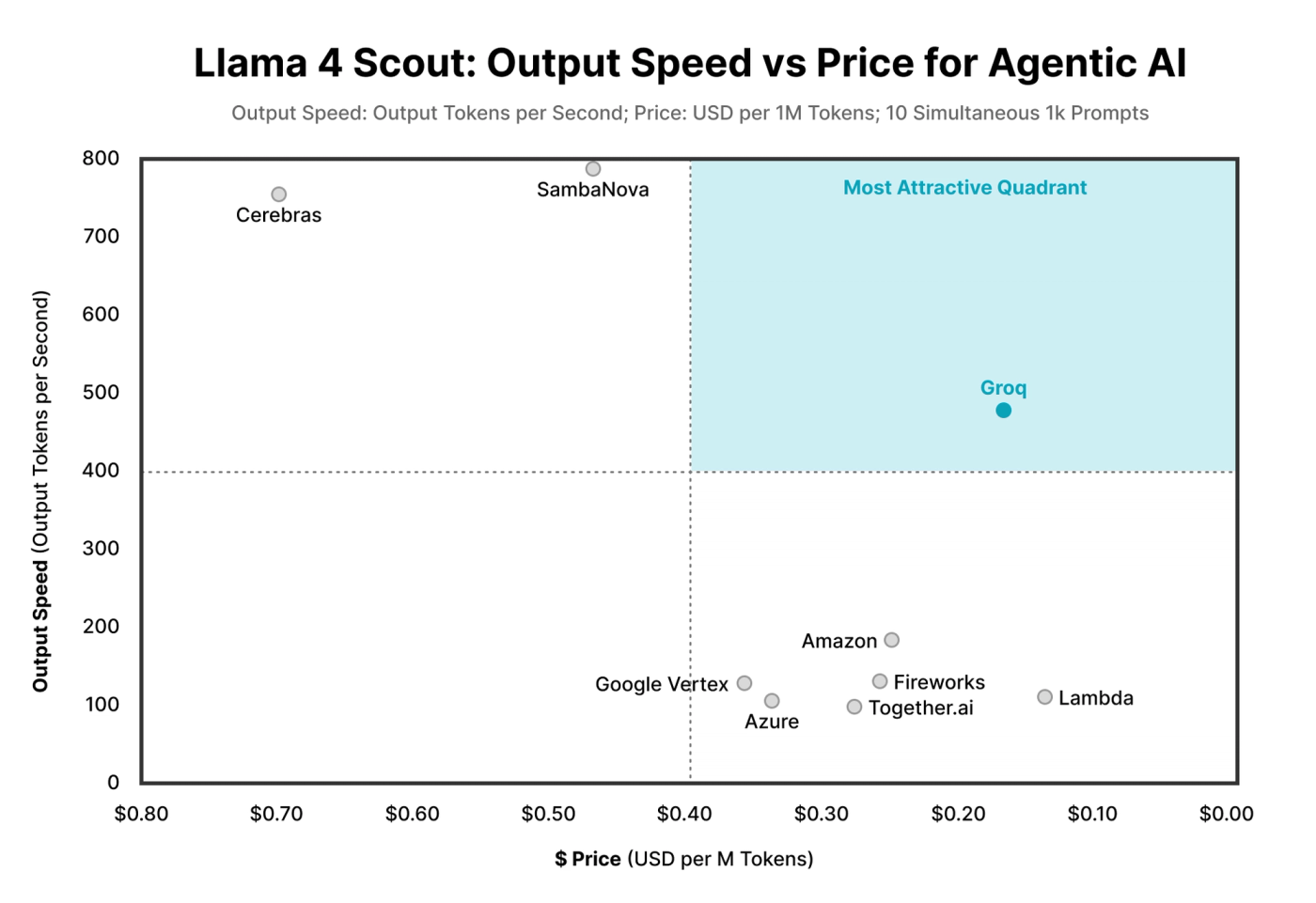

It powers GroqCloud™, a full-stack platform for fast, affordable, production-ready inference.